Tokenizer class PhobertTokenizerFast does not exist or is not currently imported. · Issue #25 · VinAIResearch/PhoBERT · GitHub

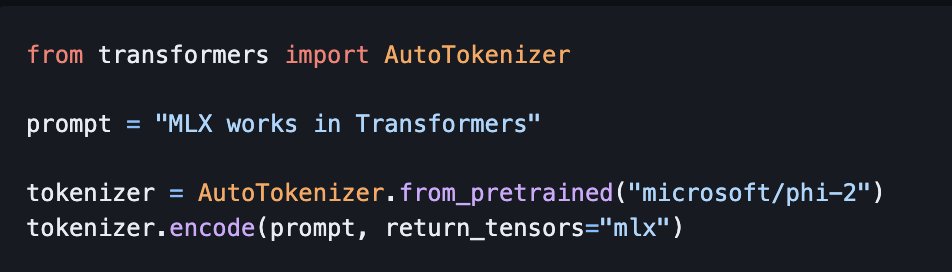

Awni Hannun on X: "It's happening: 🤗 Hugging Face's Transformers got some MLX support! - Tokenize directly to MLX arrays - Load MLX formatted safetensors to use with Transformers Release notes: https://t.co/2a4CyxQILI

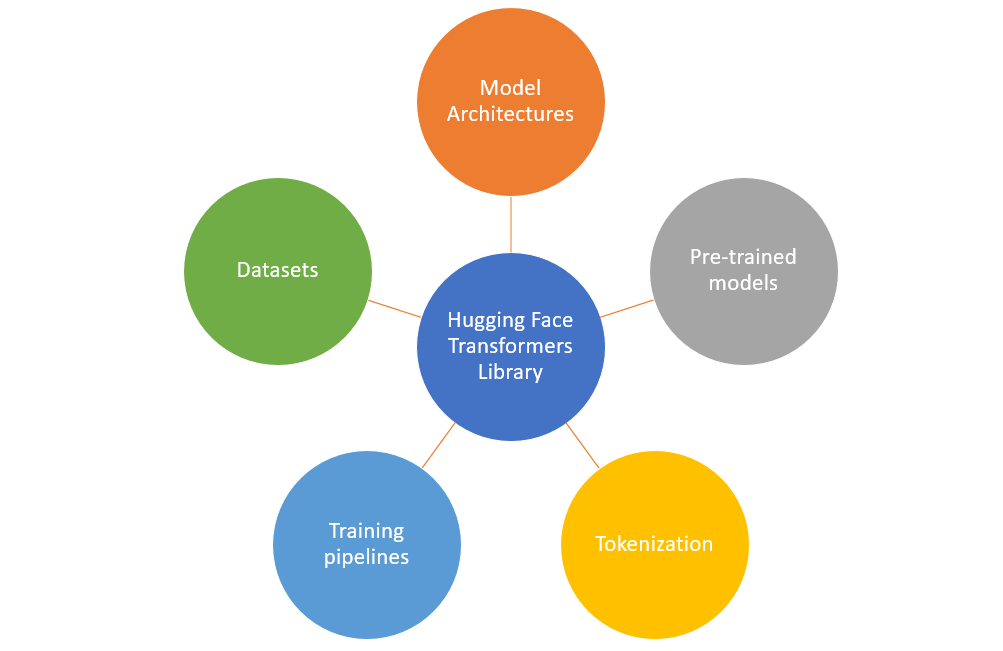

Diving Deep with Hugging Face: The GitHub of Deep Learning & Large Language Models! | by Senthil E | Level Up Coding

Hugging Face on X: "Transformers v4.20.0 is out with nine new model architectures 🤯 and support for big model inference. New models: 🔠BLOOM, GPT Neo-X, LongT5 👁️CvT, LeViT 📄LayoutLMv3 🔊M-CTC-T, Wav2Vec2-Conformer 🖥️Trajectory

Not work cache_dir of AutoTokenizer.from_pretrained('gpt2') · Issue #22825 · huggingface/transformers · GitHub

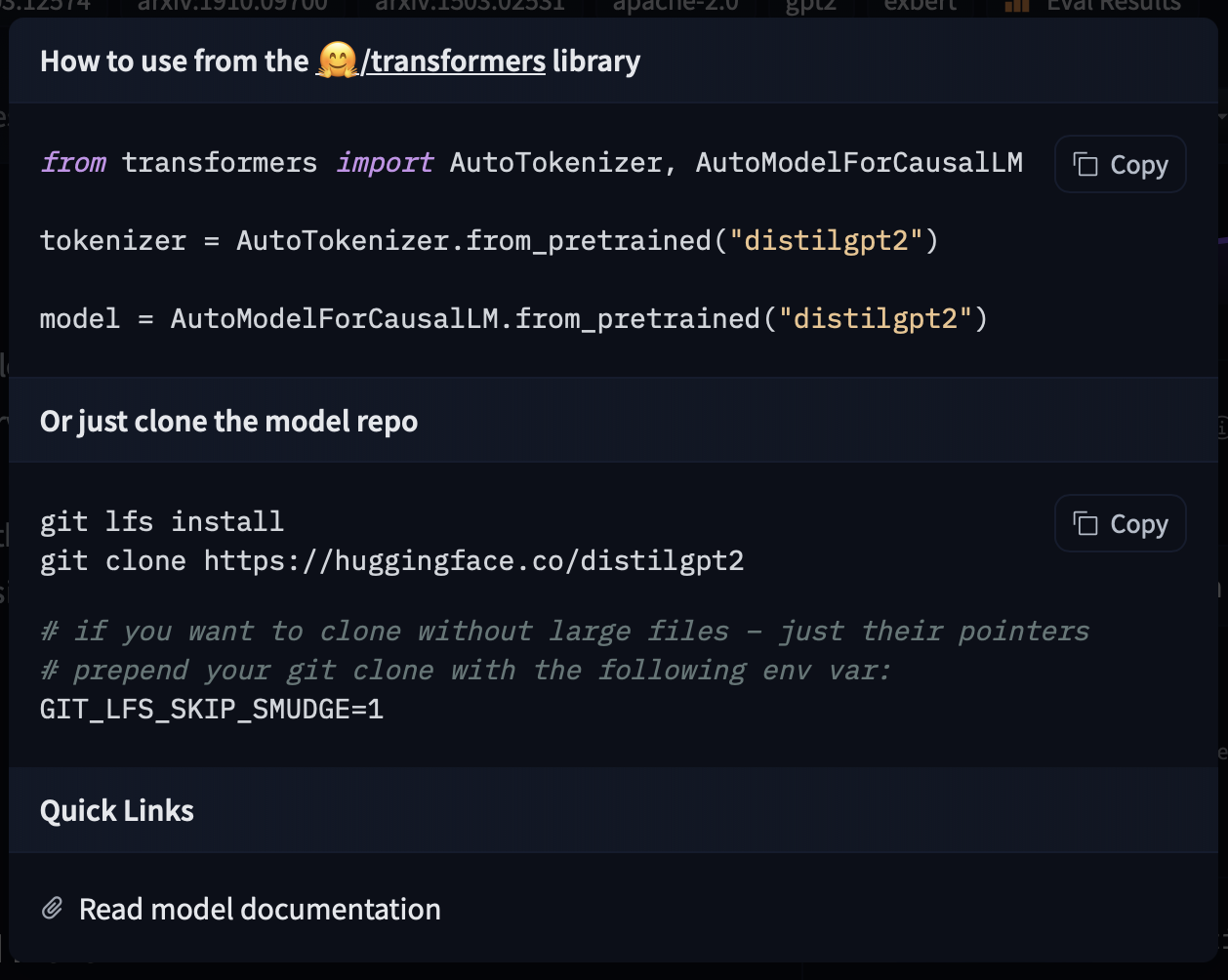

Vaibhav (VB) Srivastav on X: "You can also use it directly with Transformers too! from transformers import AutoTokenizer, AutoModelForCausalLM, GenerationConfig import torch import torchaudio import re from string import Template prompt_template =

ImportError: cannot import name 'AutoModelForSeq2SeqLMM' from 'transformers' - Generative AI with Large Language Models - DeepLearning.AI

Fine-tuning BERT model for arbitrarily long texts, Part 1 - MIM Solutions - We make artificial intelligence work for you

tensorflow - Problem with inputs when building a model with TFBertModel and AutoTokenizer from HuggingFace's transformers - Stack Overflow

Vaibhav (VB) Srivastav on X: "Use in Transformers: from transformers import AutoTokenizer, AutoModelForCausalLM import torch tokenizer = AutoTokenizer.from_pretrained("google/gemma-1.1-7b-it") model = AutoModelForCausalLM.from_pretrained( "google/gemma ...