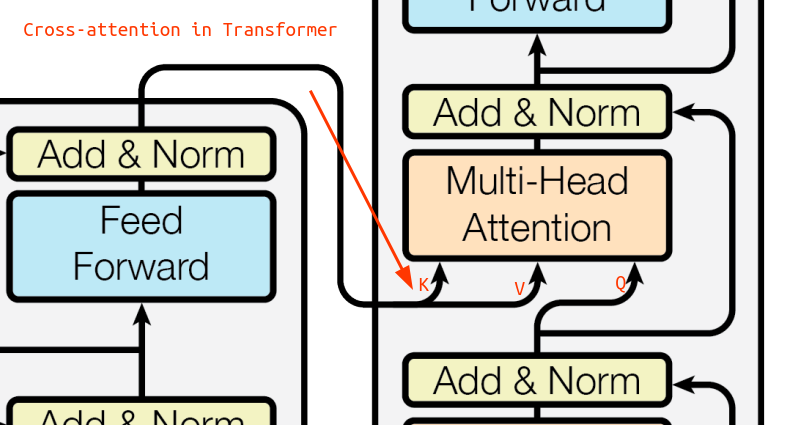

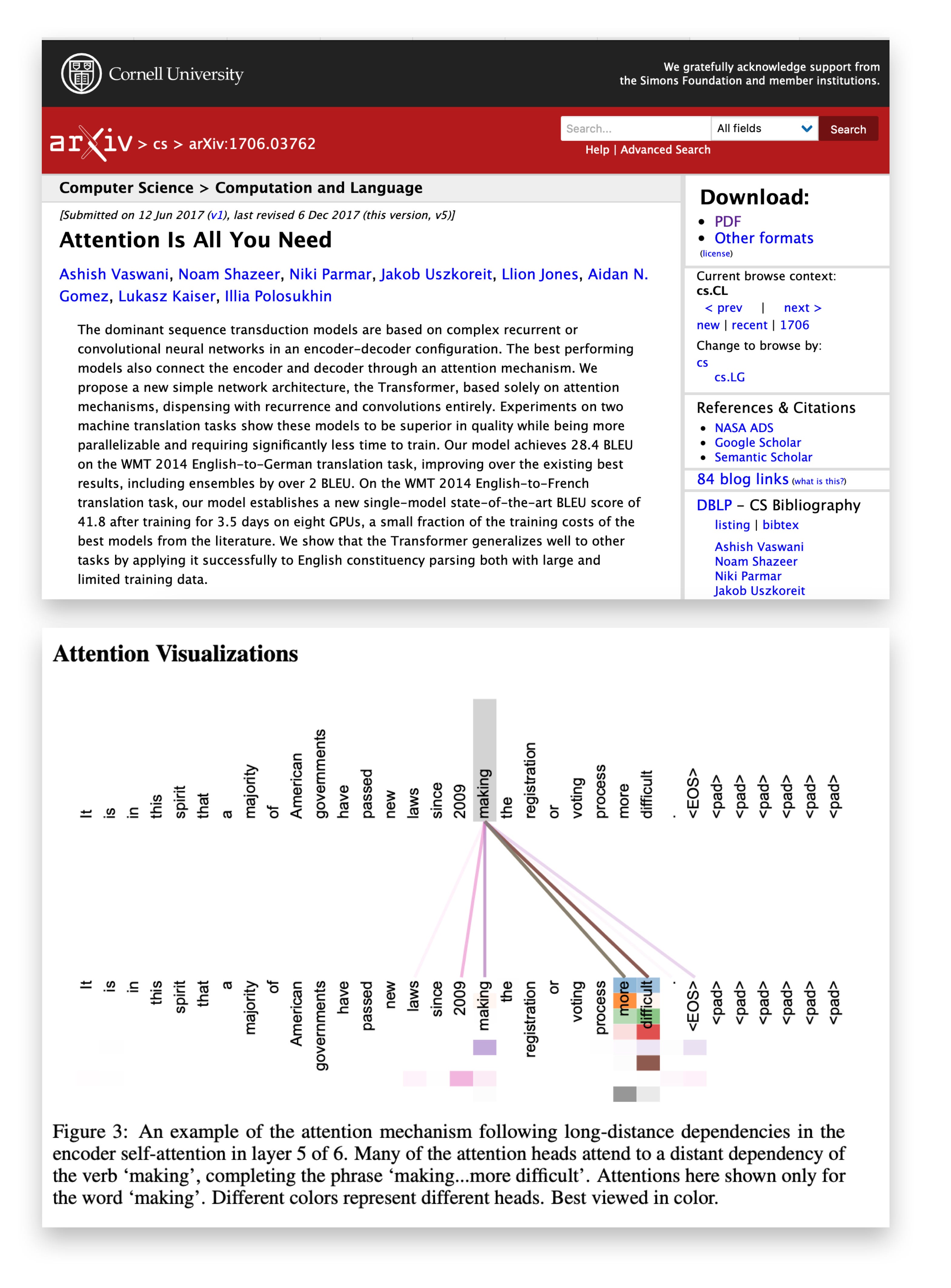

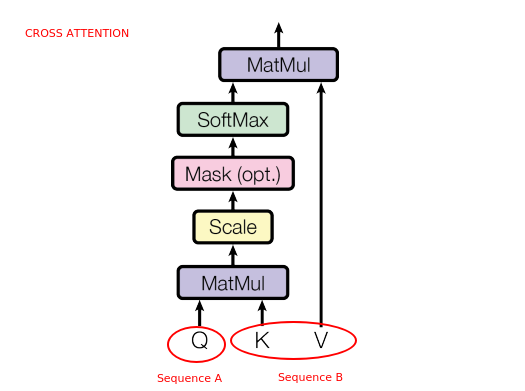

The architecture of self-attention module and cross-attention module. R... | Download Scientific Diagram

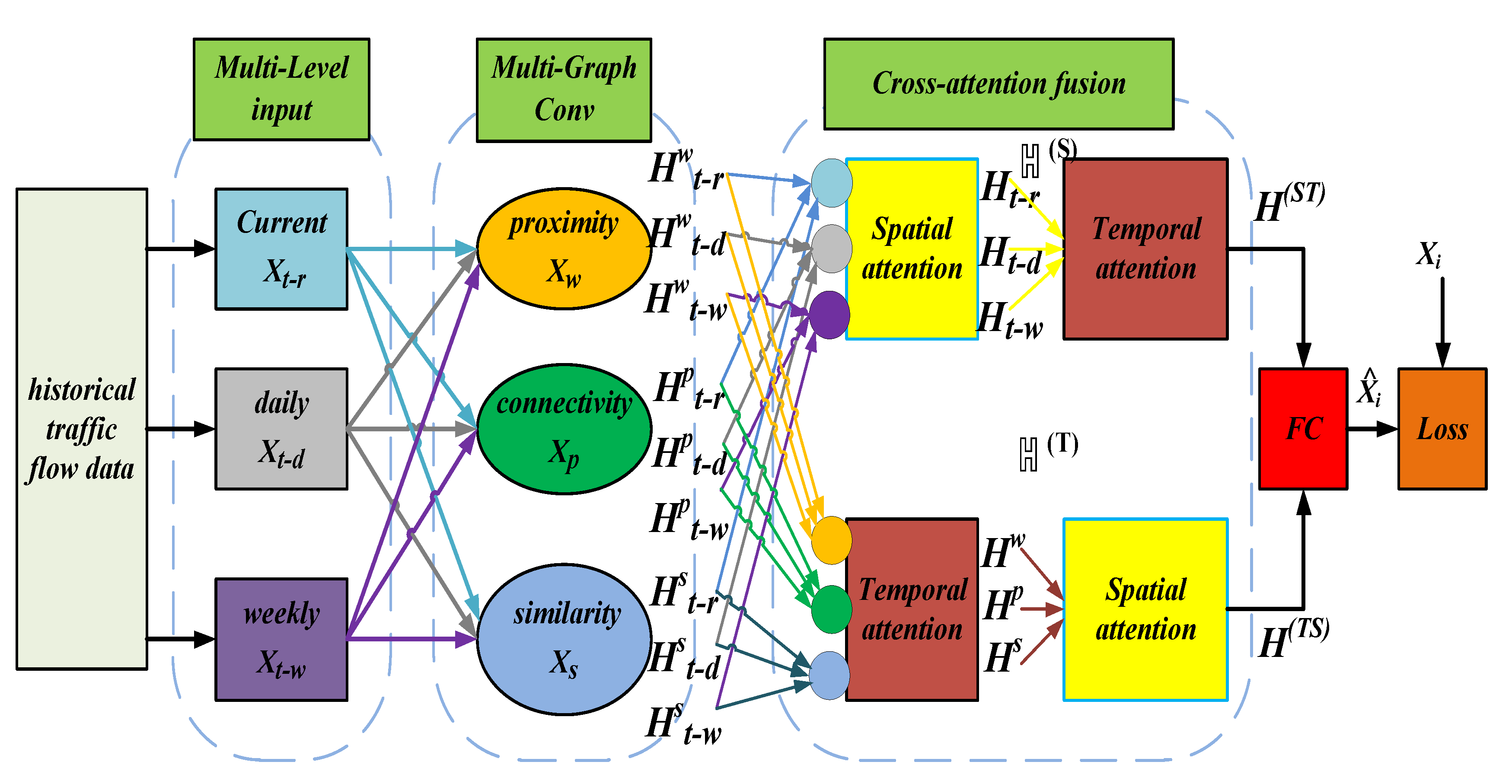

Sensors | Free Full-Text | Cross-Attention Fusion Based Spatial-Temporal Multi-Graph Convolutional Network for Traffic Flow Prediction

Cross-attention PHV: Prediction of human and virus protein-protein interactions using cross-attention–based neural networks - ScienceDirect

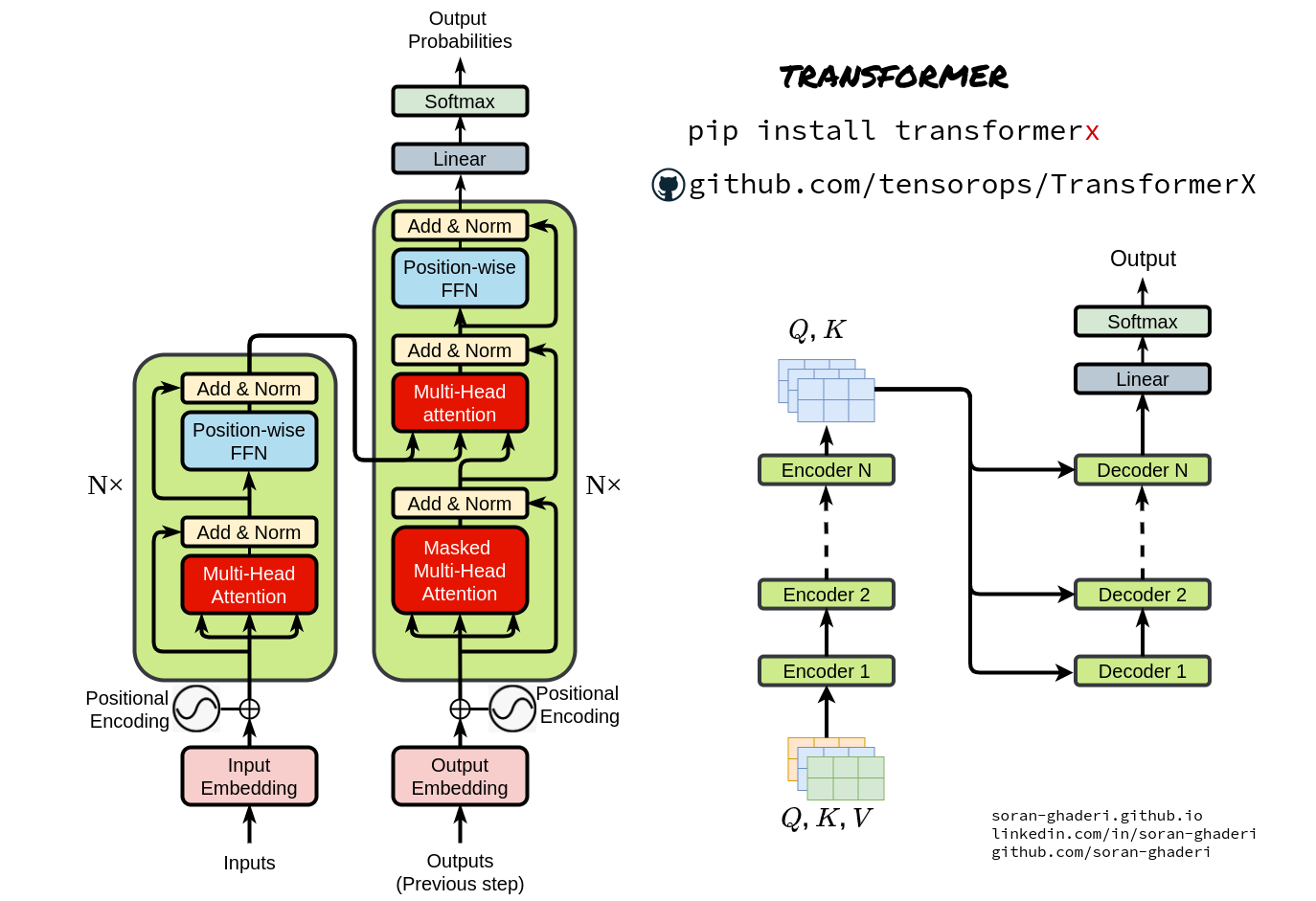

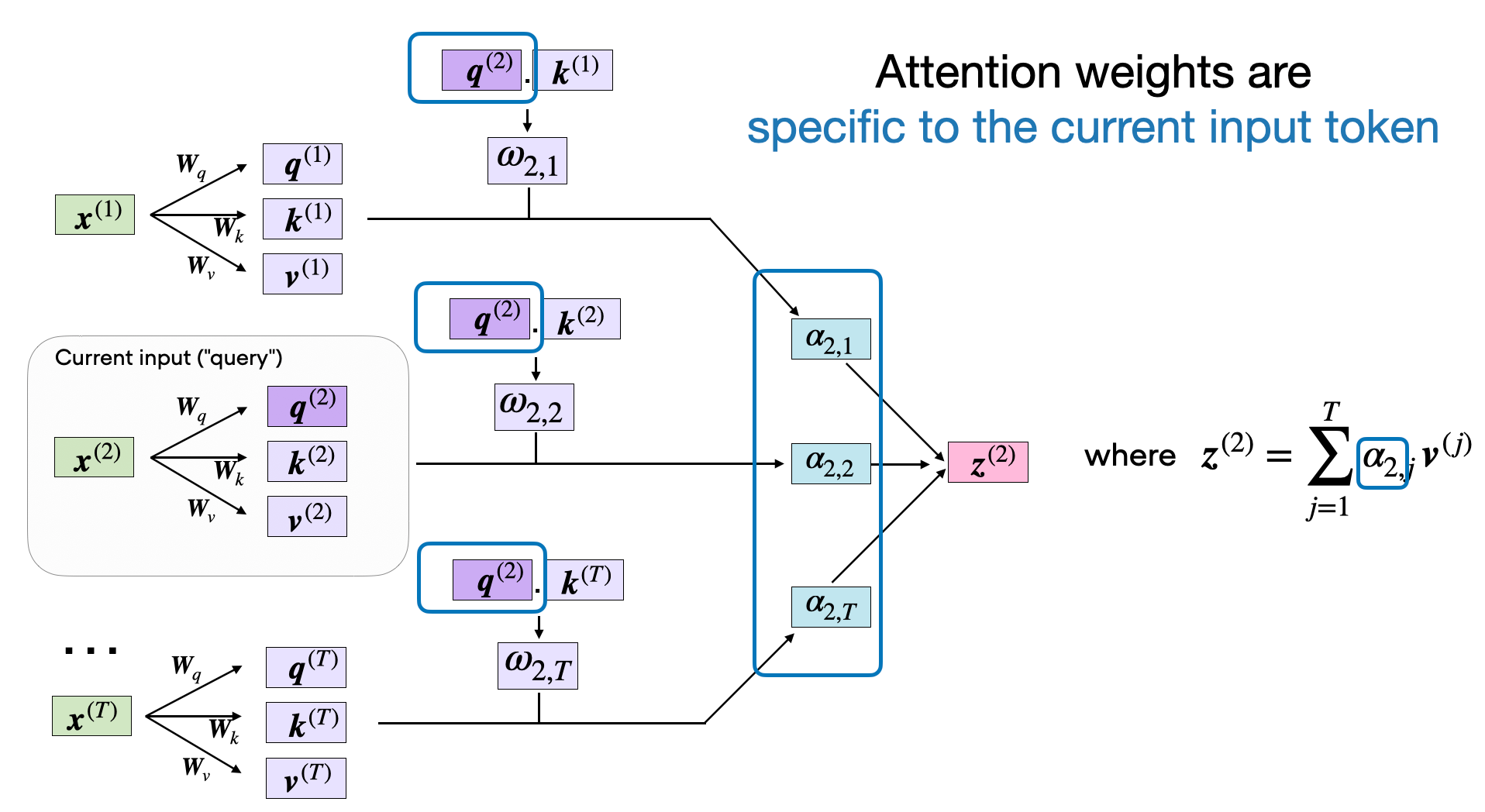

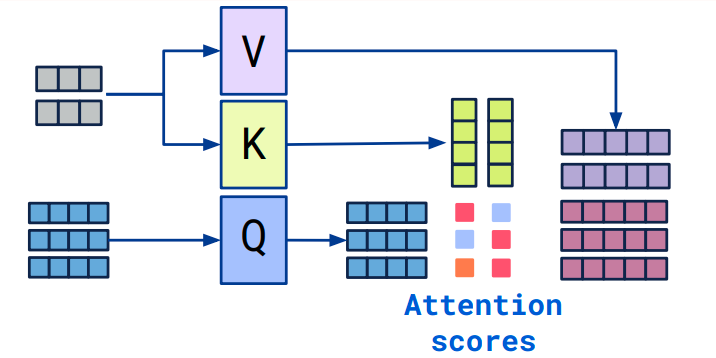

Understanding and Coding Self-Attention, Multi-Head Attention, Cross-Attention, and Causal-Attention in LLMs

GitHub - rishikksh20/CrossViT-pytorch: Implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

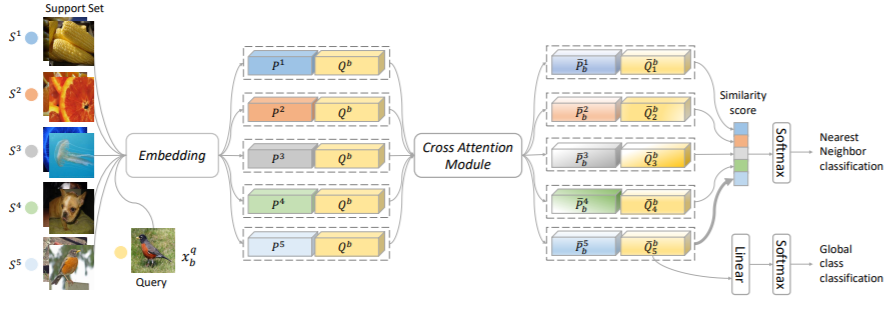

CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification | Papers With Code

Remote Sensing | Free Full-Text | MMCAN: Multi-Modal Cross-Attention Network for Free-Space Detection with Uncalibrated Hyperspectral Sensors

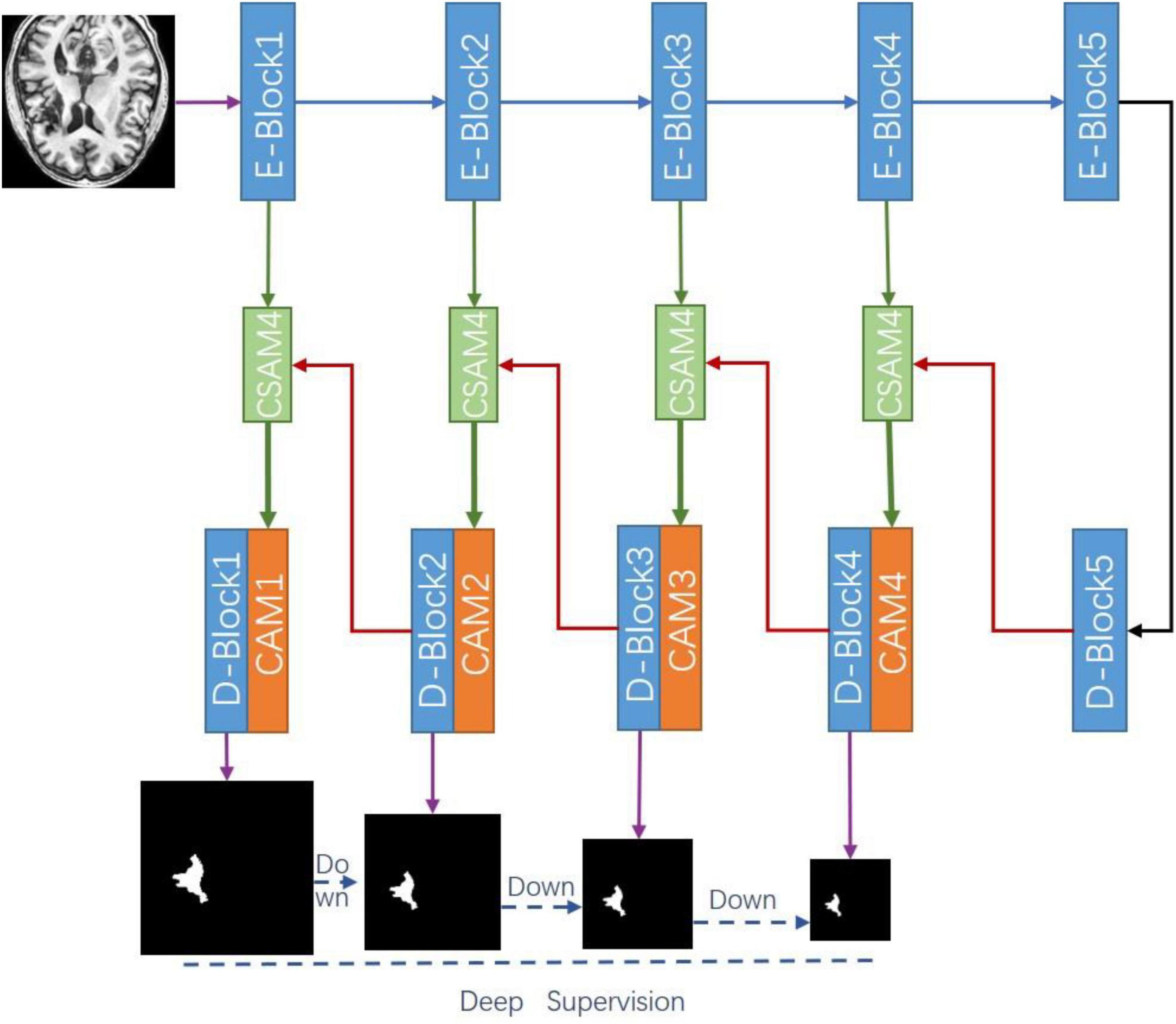

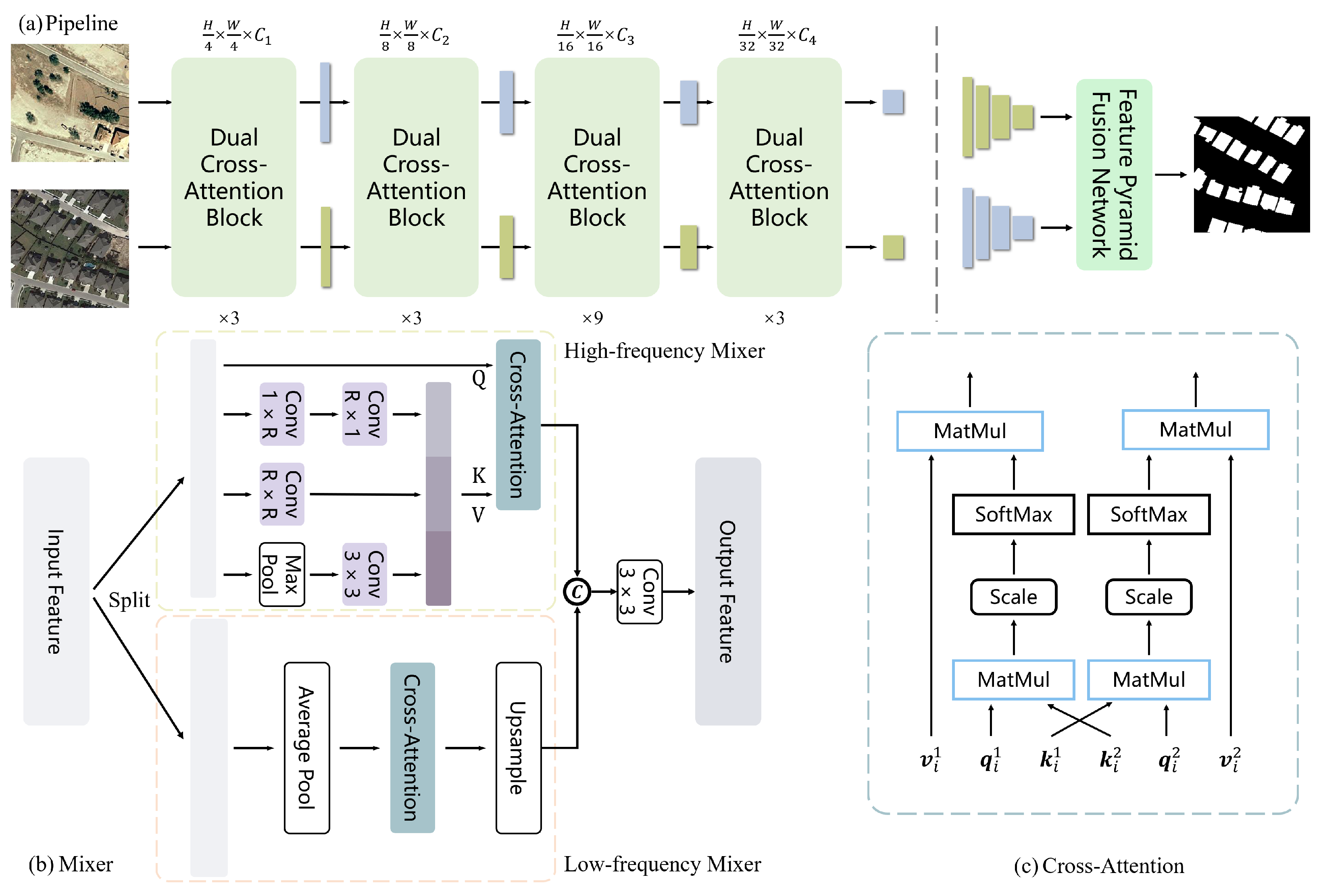

CASF-Net: Cross-attention and cross-scale fusion network for medical image segmentation - ScienceDirect

Cross-attention multi-branch network for fundus diseases classification using SLO images - ScienceDirect

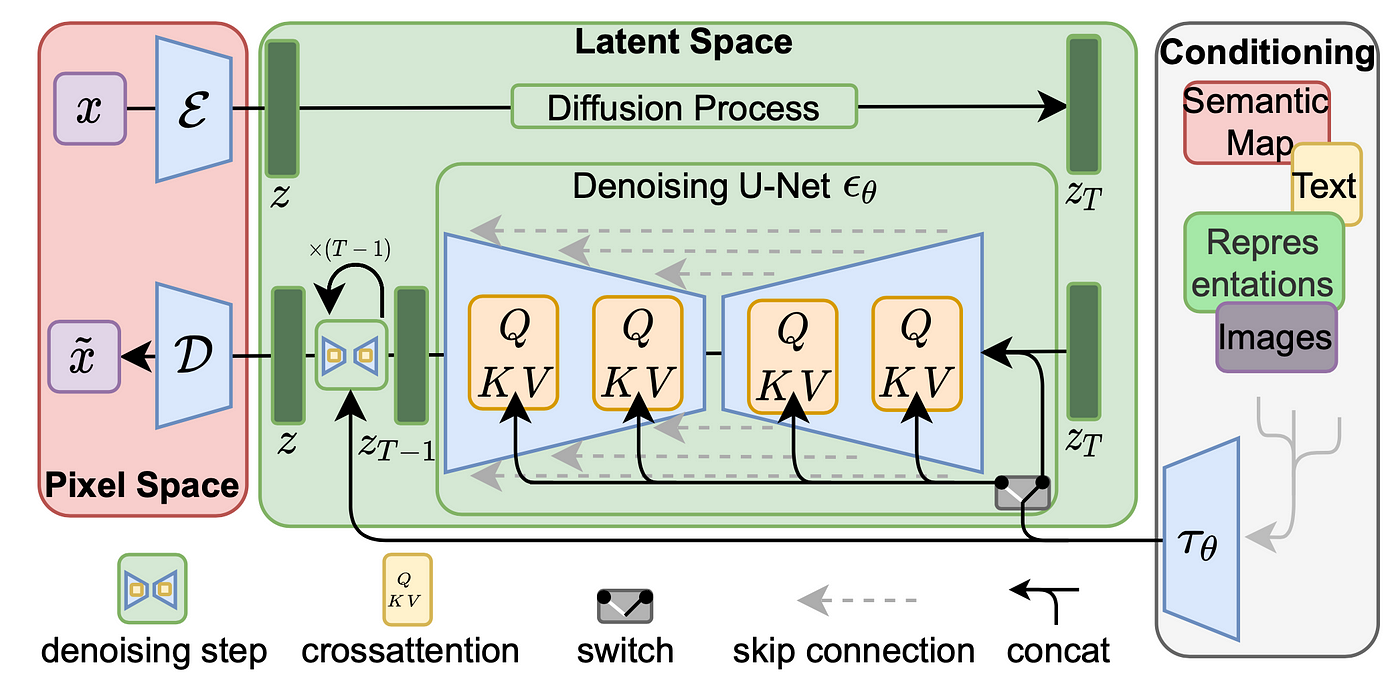

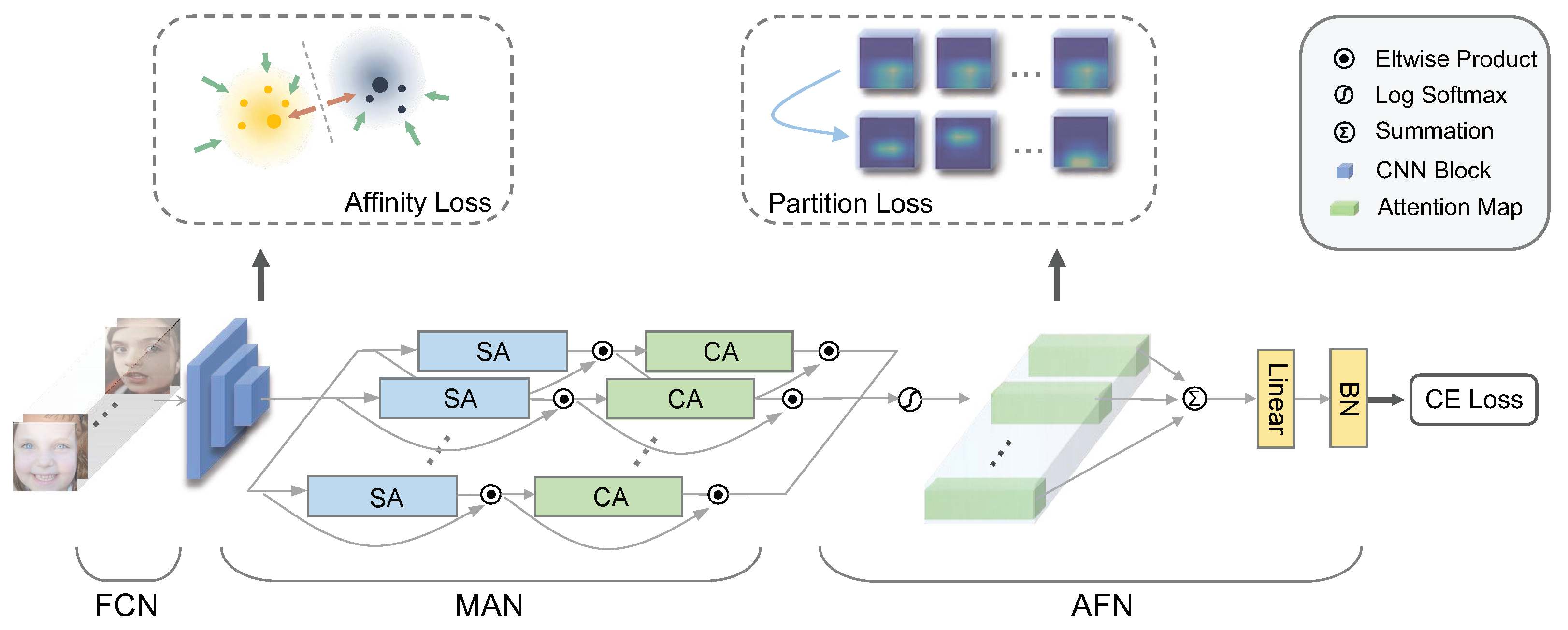

Biomimetics | Free Full-Text | Distract Your Attention: Multi-Head Cross Attention Network for Facial Expression Recognition

![[Notes] Understanding XCiT - Part 1 · Veritable Tech Blog](https://blog.ceshine.net/post/xcit-part-1/figure-1.png)